The metadata streaming controversy in Next.js 15.1+

If you’ve upgraded to Next.js 15.2 recently and noticed your metadata mysteriously appearing at the bottom of your pages, you’re not alone. What Vercel pitched as a performance optimization has become one of the most contentious features in the framework’s history.

The silence from leadership is deafening. Guillermo Rauch, typically vocal about major Next.js features, hasn’t posted a single public defense of metadata streaming. Meanwhile, developers are scrambling to understand why their SEO is tanking and metadata is rendering outside the <head> tag.

After diving deep into the technical implementation, community reactions, and real-world impacts, I’ve uncovered a feature that reveals fundamental tensions about what modern web frameworks should prioritize. Spoiler alert: it’s not pretty.

The Official Story: Performance at What Cost?

The pitch sounds compelling. From the Next.js 15.2 release notes:

“It can often be necessary to fetch dynamic data, or perform some async operation, in generateMetadata. In prior versions of Next.js, this metadata needed to finish generating before the initial UI would be sent… This meant that for many pages where a fast initial UI was available, the initial paint was still delayed by data requirements that did not affect what the user would see visually.”

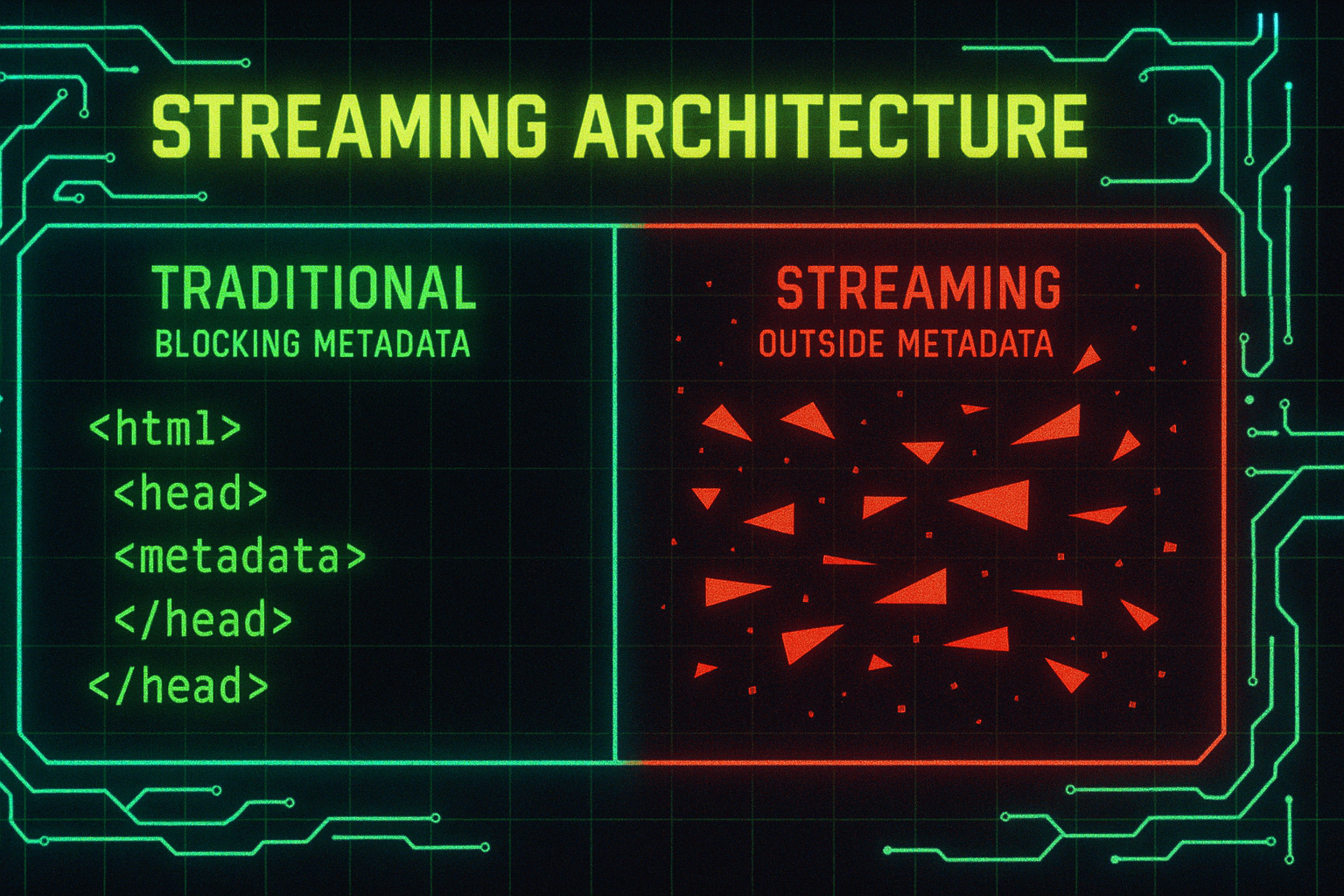

In theory, metadata streaming solves a real problem. Your users see content faster while metadata loads asynchronously in the background. For JavaScript-enabled crawlers like Googlebot, this works fine. For HTML-limited bots like Twitterbot, Next.js maintains blocking behavior.

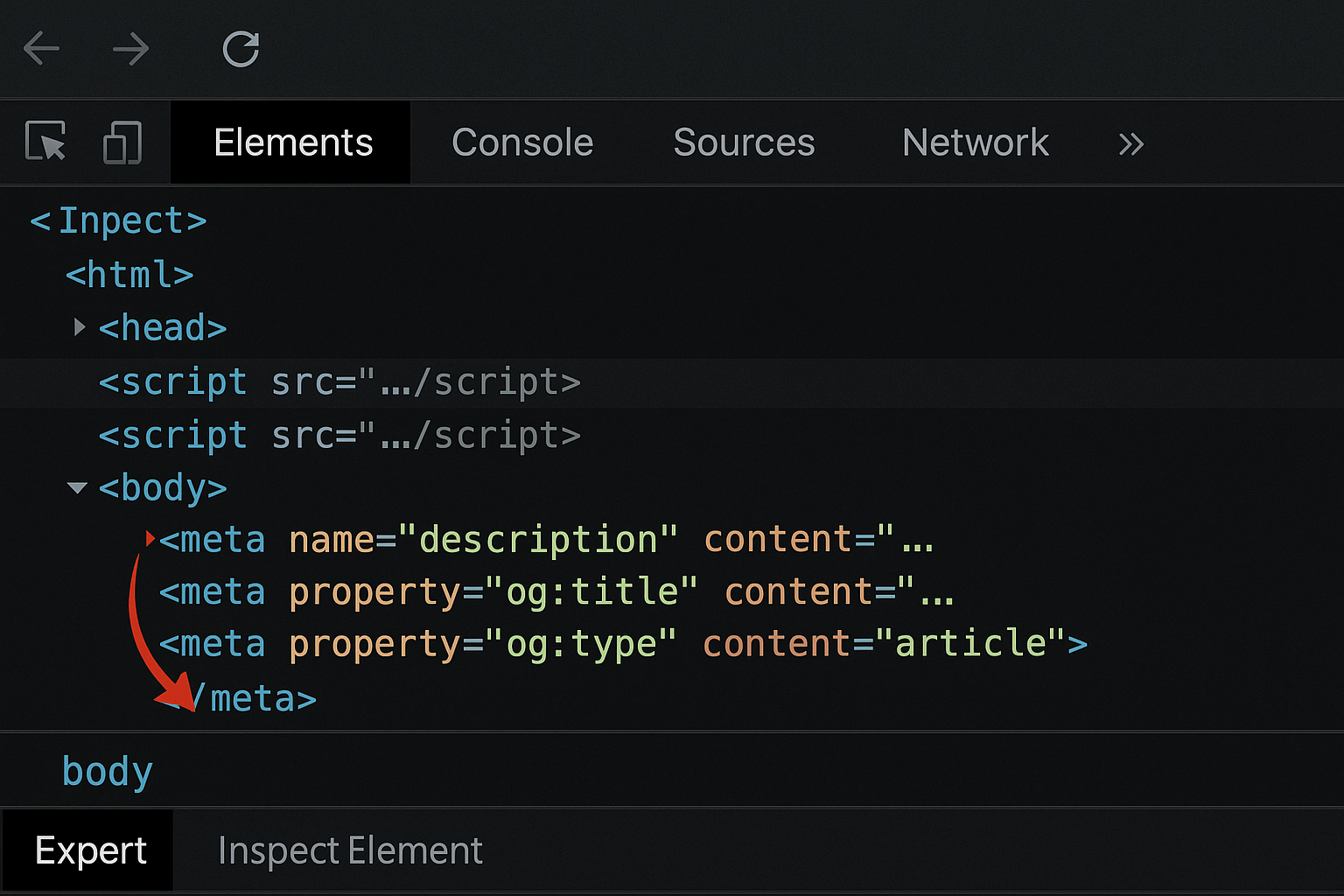

But here’s where theory meets reality: developers are reporting metadata appearing at the bottom of pages, below script tags, completely outside the <head> element. The feature that promised better performance delivered broken SEO instead.

Community Revolt: When “Optimization” Breaks Production

The Stack Overflow posts tell the real story. Developers describe “plummeting SEO impressions” and metadata that renders correctly on hard refresh but migrates outside the head on normal navigation. As one frustrated developer put it: “My metadata is literally at the bottom of the page below the script tags. How is this an improvement?” Another reported: “SEO tanked overnight after upgrading. Wish there had been a warning about this ‘feature.’”

The most damning feedback? Many discovered these breaking changes only after their sites were already in production.

One developer’s characterization cuts deep: “vendor lock-in disguised as an open-source framework.” They argue that static builds now require JavaScript execution for basic metadata functionality—a fundamental violation of progressive enhancement principles.

The technical complaints center on practical problems:

- Metadata renders outside

<head>tags - Inconsistent behavior between hard refresh and navigation

- No clear migration path or warnings

- Default-on status without obvious opt-out

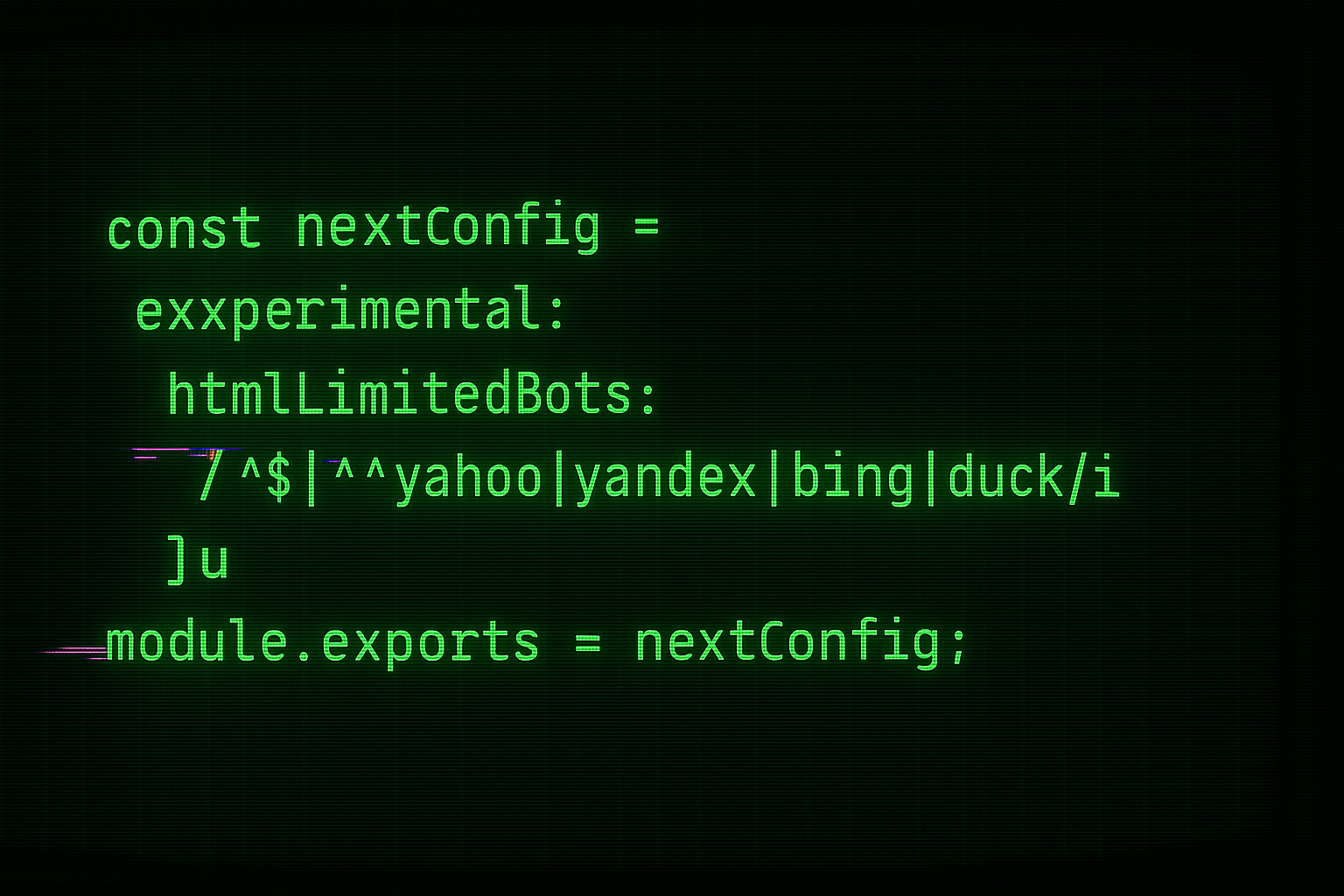

Multiple developers report being forced to manually configure htmlLimitedBots patterns just to restore expected behavior.

The only workaround requires manual configuration:

const config = {

htmlLimitedBots: /MyBot|OtherBot|SimpleCrawler/,

}

But this only controls which bots get blocking behavior—it doesn’t provide a complete opt-out for developers who simply want their metadata in the right place.

The Technical Deep Dive: Complexity for Edge Cases

The implementation reveals sophisticated engineering solving a narrow problem. Next.js now maintains dual rendering paths:

- Blocking metadata for HTML-limited bots

- Streaming metadata with JavaScript injection for capable clients

This dual-path approach adds significant complexity. The framework must:

- Detect bot capabilities through user agent sniffing

- Maintain separate rendering pipelines

- Handle hydration edge cases

- Manage potential race conditions

All this for what? The community consensus is clear: metadata generation is typically fast (<1KB) and rarely involves expensive operations. The performance gains target edge cases while adding complexity for everyone.

The philosophical divide runs deep:

Team Streaming argues:

- Solves real bottlenecks for metadata involving API calls

- Improves TTFB and FCP for metadata-heavy pages

- Pushes boundaries of SSR optimization

- Maintains backward compatibility through bot detection

Team Simplicity counters:

- Metadata is lightweight and fast by design

- Complexity outweighs edge-case benefits

- Violates HTML semantics (metadata belongs in

<head>) - Creates debugging nightmares

The Missing Evidence: Where Are the Benchmarks?

For a feature justified entirely by performance, the absence of concrete benchmarks is striking. Vercel reports:

- 30% memory reduction during development (but for Turbopack, not metadata streaming)

- Qualitative claims of “immediately cuts down on time”

- Testimonials from companies about general SSR improvements

But where are the A/B tests? The TTFB comparisons? The real-world impact measurements?

Without data, we’re left with anecdotal evidence: developers claiming SEO disasters versus Vercel claiming performance wins. This isn’t how major framework changes should be evaluated.

Limited Escape Hatches: Configuration That Frustrates

The only official configuration is the htmlLimitedBots regex pattern. No complete opt-out. No per-route control. No loading states for metadata generation.

Community proposals reveal what developers actually want:

- Complete disable flag for metadata streaming

- Per-route streaming configuration

- Better default bot detection

- Built-in loading states

The most common workaround? Avoiding generateMetadata() entirely in favor of static metadata objects. This naturally bypasses streaming but defeats the purpose of dynamic metadata generation.

// Instead of this (streaming enabled)

export async function generateMetadata() {

const data = await fetch('/api/metadata')

return {

title: data.title,

description: data.description

}

}

// Developers use this (streaming bypassed)

export const metadata = {

title: 'Static Title',

description: 'Static Description'

}

When Streaming Actually Makes Sense (Hint: Rarely)

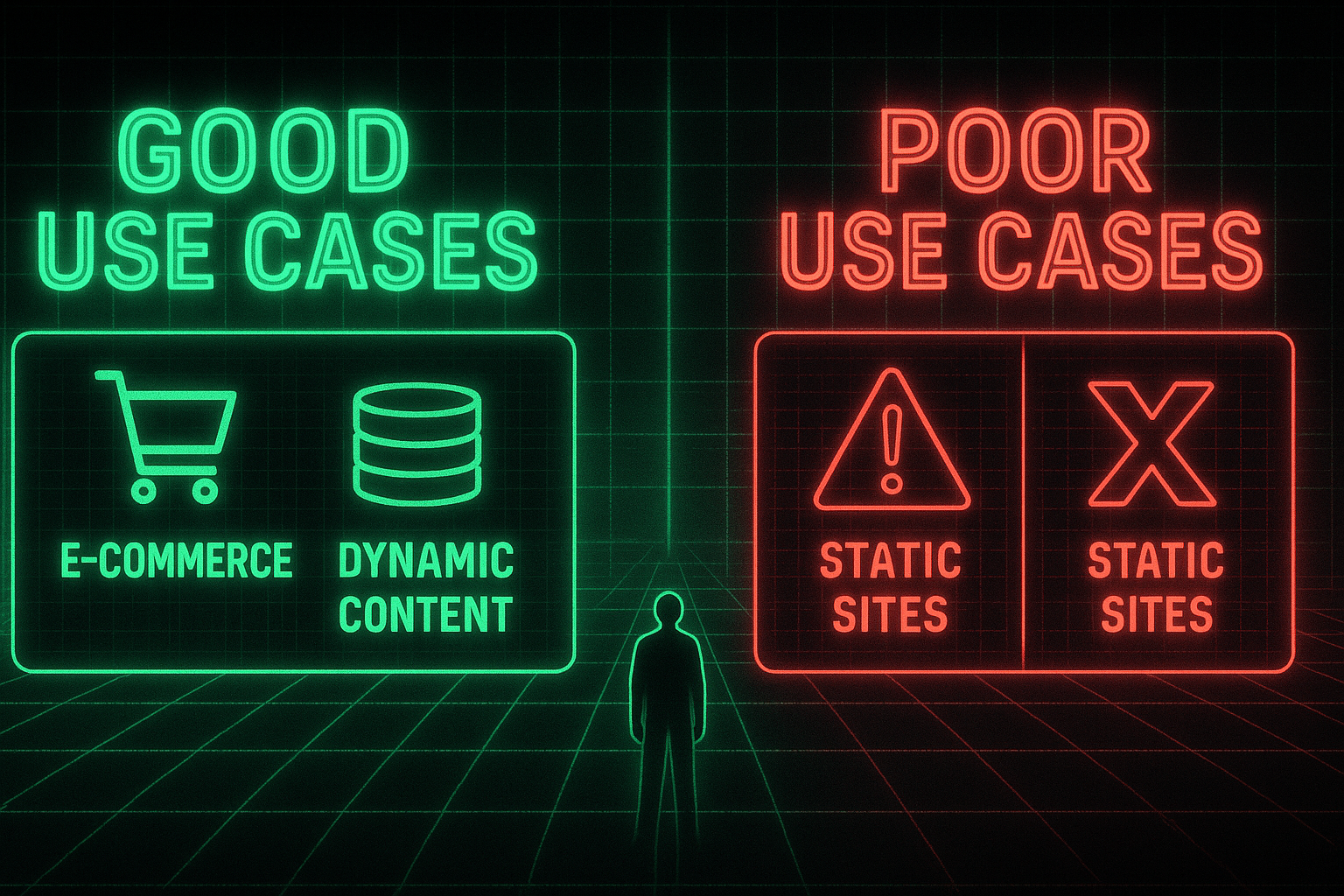

Let’s be fair. There are legitimate use cases for metadata streaming:

Justified scenarios:

- E-commerce with database lookups for product titles

- Blogs generating Open Graph images on-demand

- Applications with genuinely expensive metadata operations (>100ms)

- High-traffic sites where every millisecond matters

Poor fit scenarios:

- Static or mostly-static sites

- Simple metadata requirements

- SEO-critical pages needing predictable behavior

- Teams prioritizing simplicity

The pattern is clear: metadata streaming solves real problems for a small subset of applications while adding complexity for everyone else.

Framework Philosophy: Next.js vs. The World

Other frameworks take refreshingly simple approaches:

- Remix: Traditional SSR with metadata always in initial HTML

- Nuxt.js: Built-in head management without streaming complexity

- SvelteKit: Flexible rendering with granular control

These frameworks prioritize predictable behavior and developer experience over micro-optimizations. They recognize that not every performance improvement is worth the complexity cost.

Next.js increasingly optimizes for sophisticated edge cases while assuming teams have deep framework knowledge. Features like ISR, Edge Runtime, and now metadata streaming require understanding complex implementation details just to debug basic issues.

The Deeper Pattern: Innovation vs. Stability

This controversy represents more than a technical disagreement. It’s a fundamental debate about framework philosophy—and potentially, a deliberate strategy.

Consider this alongside Next.js’s other Vercel-optimized features: ISR works best on Vercel, Edge Runtime has platform-specific limitations, and now metadata streaming adds another layer of complexity that “just works” on their infrastructure. Each feature, individually defensible, collectively creates a framework that’s technically portable but practically dependent on Vercel’s platform.

The pattern is subtle but consistent:

Innovation-first approach (Next.js):

- Push technical boundaries

- Optimize for cutting-edge use cases

- Accept complexity for performance gains

- Move fast, document later

Stability-first approach (alternatives):

- Predictable behavior by default

- Optimize for common use cases

- Reject complexity without clear benefits

- Change slowly, communicate clearly

Neither approach is inherently wrong. But Next.js’s shift toward complexity without clear communication creates friction for teams who chose the framework for its original simplicity.

The Silence That Speaks Volumes

Perhaps most telling is what’s missing: strong public advocacy from Vercel leadership. Guillermo Rauch, who enthusiastically promotes features like Server Components and Partial Pre-rendering, hasn’t made a single public statement defending metadata streaming.

This silence suggests even Vercel views this as a technical optimization rather than a transformative feature. Yet it’s enabled by default, breaking production sites without warning.

The lack of benchmarks, limited configuration options, and absent leadership advocacy paint a picture of a feature that solved an internal problem but wasn’t ready for public consumption.

Practical Recommendations: Navigating the Mess

For teams using Next.js 15.2+, here’s your survival guide:

1. Measure before assuming you need streaming

export async function generateMetadata() {

const start = Date.now()

// Your metadata logic here

console.log(`Metadata generation: ${Date.now() - start}ms`)

}

If generation takes <100ms, streaming adds unnecessary complexity.

2. Configure bot detection carefully

// Expand the default pattern for your needs

const config = {

htmlLimitedBots: /Twitterbot|Discordbot|Slackbot|LinkedInBot|WhatsApp|Telegram/i,

}

3. Consider static metadata for critical pages SEO-critical pages shouldn’t be experiments. Use static metadata for homepage, landing pages, and high-traffic content.

4. Implement monitoring Track metadata rendering positions and SEO performance. Don’t wait for users to report issues.

5. Have a rollback plan Keep Next.js 14.x in your back pocket. Some teams report staying on 15.1.7 to avoid streaming entirely.

The Verdict: A Feature in Search of a Problem

Metadata streaming exemplifies a concerning trend in Next.js development: sophisticated solutions to edge-case problems becoming default behavior without clear communication or easy opt-outs.

For the small subset of applications with genuinely expensive metadata operations, streaming provides real value. For everyone else, it’s complexity without benefit—and potentially with significant downside.

The framework that once prioritized developer experience now requires deep technical knowledge just to ensure metadata renders in the correct location. That’s not progress.

My recommendation? Measure your actual metadata performance before accepting streaming’s complexity. If your metadata generates quickly (and it probably does), the traditional approach remains simpler, more predictable, and less likely to tank your SEO.

The Next.js team would do well to remember: not every optimization is an improvement. Sometimes boring is better. And metadata? That should definitely be boring.